Abhinav Sharma

abhinavs@umass.edu

About Me

I am a graduate student at UMass, currently advised by Prof. Brian Plancher. I work on analytical models for rigid-body dynamics, extending the work on Inverse Dynamics to compute the second-order partial derivatives for Forward/Backward Dynamics, which are commonly required for optimization and motion planning for legged robots. My research interests lie at the intersection of autonomous systems and machine learning.

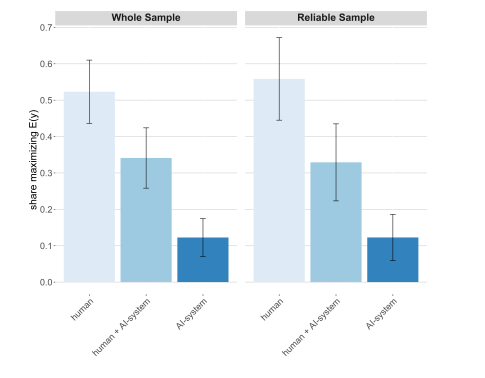

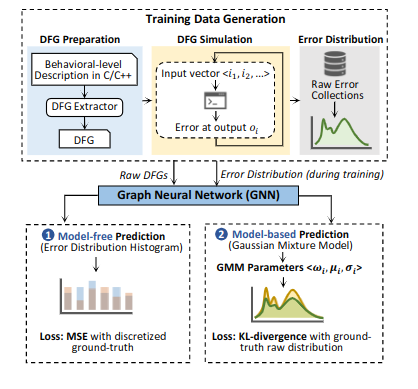

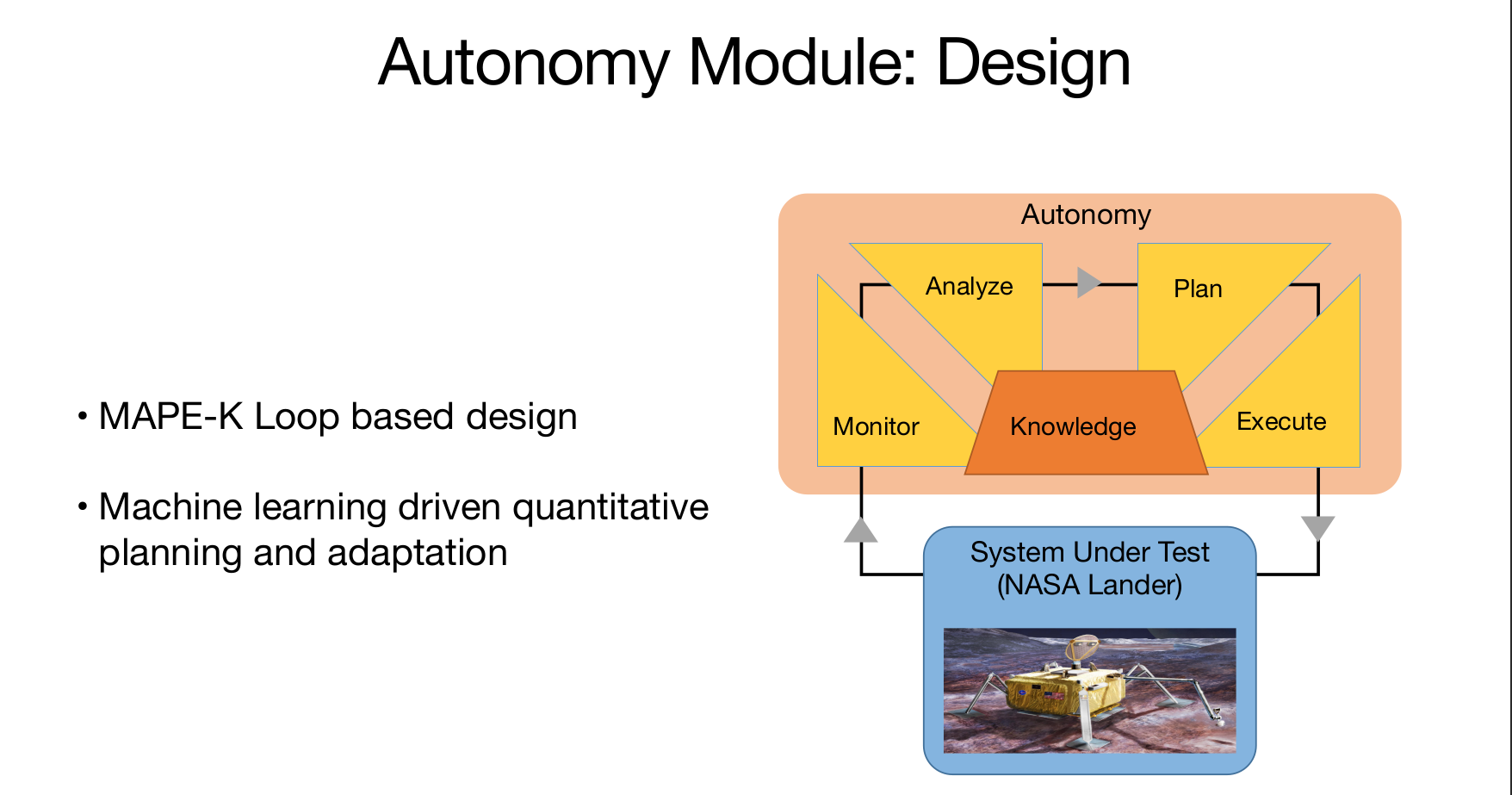

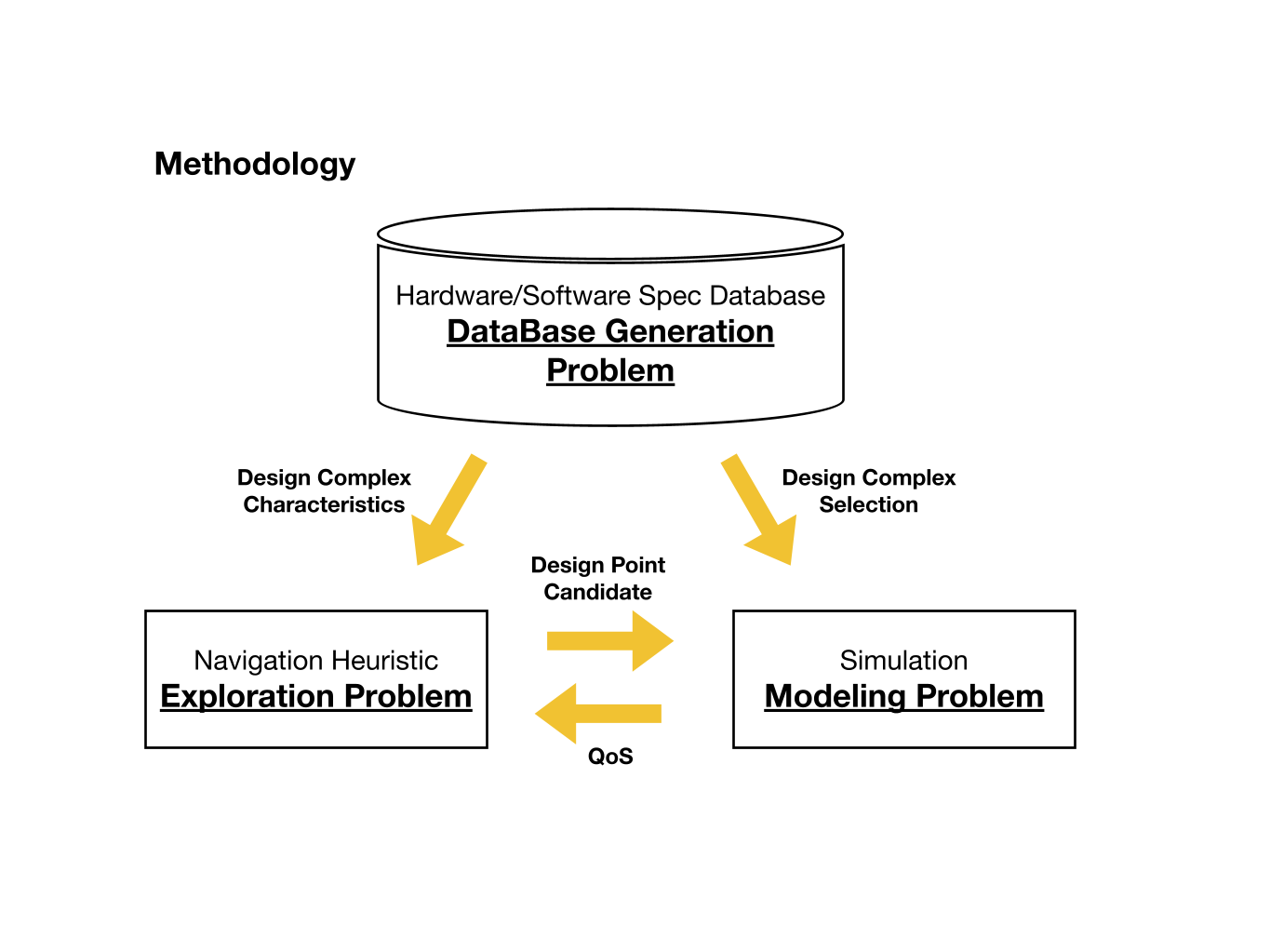

In Jan 2023, I worked on the Amazon Prime Video team on the infrastructure migration of a Tier-1 service using AWS Fargate and CDK, gaining solid knowledge of cloud computing. I spent the summer of 2022 as a Research Specialist at AISys Lab, collaborating closely with Prof. Pooyan Jamshidi (UofSC), Prof. Devashree Tripathy (Postdoc Harvard), and Dr. Behzad Boroujerdian (Facebook Research). Before that, I was a research intern in Sharc Lab (GaTech) under Prof. Callie (Cong) Hao.